TL;DR

Suppose we have a model $f:\mathbb{R}^n \to \mathbb{R}$ that takes a vector of $n$ inputs. You might imagine our model is a classifier that operates on MNIST and outputs the probability that the image is an $8$. It takes in a vector of inputs corresponding to patches in a black-and-white image of a hand-drawn digit. A natural question is what percentage of the variance in the probability of an image being an eight can be attributed to a particular patch. This is the question that Sobol's method answers.

1. The Setup

We assume that our inputs are independent and uniformly distributed. Without loss of generality, let's assume that they live on the unit hypercube $[0,1]^n$, and write $Y = f(X_1,\dots,X_n)$ for the model's output.
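The "without loss of generality" step rests on the probability integral transform. If an input $X_k$ has a continuous CDF $F_k$, then $U_k = F_k(X_k)$ is uniform on $[0,1]$, and the model can be rewritten as a function of $U_k$ through $F_k^{-1}$. Transforming each input separately keeps them independent. Below is a minimal sketch with one hypothetical exponential input; `rate`, `f`, and `g` are illustrative names, not from the text.

```python
import numpy as np

rate = 2.0  # hypothetical: X_1 ~ Exponential(rate); X_2 is already Uniform(0, 1)

def exp_cdf(x):
    """F(x) = 1 - exp(-rate * x), the CDF of X_1."""
    return 1.0 - np.exp(-rate * x)

def exp_inv_cdf(u):
    """F^{-1}(u) = -log(1 - u) / rate."""
    return -np.log1p(-u) / rate

def f(x1, x2):
    """Hypothetical model on the original input space."""
    return x1 * x2

def g(u1, u2):
    """The same model re-expressed on the unit square [0, 1]^2."""
    return f(exp_inv_cdf(u1), u2)

rng = np.random.default_rng(0)
x1 = rng.exponential(scale=1 / rate, size=100_000)
u1 = exp_cdf(x1)  # should look Uniform(0, 1)

# Sample mean should be close to 1/2 and sample variance close to 1/12.
print(u1.mean(), u1.var())
# g on the transformed inputs reproduces f on the original inputs.
print(np.allclose(g(u1, 0.3), f(x1, 0.3)))
```

Any sensitivity analysis of `g` on $[0,1]^2$ is therefore a sensitivity analysis of `f` in the original coordinates.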

We expand $f$ as a sum of orthogonal components:

$$f(X_1,\dots,X_n) = f_0 + \sum_{i=1}^n f_i(X_i) + \sum_{i<j} f_{ij}(X_i,X_j) + \sum_{i<j<k} f_{ijk}(X_i,X_j,X_k) + \cdots + f_{1\,2\,\dots n}(X_1,\dots,X_n).$$
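For a concrete instance (an illustrative function of our own choosing), take $n=2$ and $f(x_1,x_2)=x_1+x_2+x_1x_2$ with $X_1,X_2$ independent and uniform on $[0,1]$. The expansion then has exactly four terms:

$$x_1 + x_2 + x_1x_2 = \underbrace{\tfrac54}_{f_0} + \underbrace{\tfrac32\big(x_1-\tfrac12\big)}_{f_1(x_1)} + \underbrace{\tfrac32\big(x_2-\tfrac12\big)}_{f_2(x_2)} + \underbrace{\big(x_1-\tfrac12\big)\big(x_2-\tfrac12\big)}_{f_{12}(x_1,x_2)}.$$

Expanding the right-hand side recovers the left-hand side term by term, and each non-constant piece averages to zero over each of its own variables; for example, $\int_0^1 (x_1-\tfrac12)\,dx_1 = 0$.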

2. How We Get the Components

Start with the mean term:

$$f_0 = \mathbb{E}[Y].$$

The first-order (main effect) component for $X_i$ is

$$f_i(X_i) = \mathbb{E}[Y\mid X_i] - f_0.$$

The second-order interaction between $X_i$ and $X_j$ is

$$f_{ij}(X_i,X_j) = \mathbb{E}[Y\mid X_i,X_j] - f_i(X_i) - f_j(X_j) - f_0.$$

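And so on: in general, writing $X_S$ for the inputs indexed by $S\subseteq\{1,\dots,n\}$ and setting $f_\emptyset = f_0$,

$$f_S(X_S) = \mathbb{E}[Y\mid X_S] - \sum_{T\subsetneq S} f_T(X_T).$$

Each higher-order term subtracts all lower-order pieces, so every component with $|S|\ge 1$ has mean zero and, because the inputs are independent, is orthogonal to every other component. The mean-zero property follows from the law of total expectation (often jokingly called “Adam’s law”): since $\mathbb{E}\big[\mathbb{E}[Y\mid X_S]\big] = \mathbb{E}[Y] = f_0$, induction on $|S|$ gives $\mathbb{E}[f_S] = f_0 - f_0 - \sum_{\emptyset\neq T\subsetneq S}\mathbb{E}[f_T] = 0$. In words, $f_S$ is the projection of $f$ onto the functions of $X_S$ alone, minus everything already accounted for by proper subsets of $S$.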
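The recursive definition translates directly into code. This is a minimal sketch, not a general-purpose tool: it assumes each input takes finitely many equally likely grid values (a discretized stand-in for the uniform distribution), so $f$ can be stored as an $n$-dimensional array and $\mathbb{E}[Y\mid X_S]$ is a mean over the remaining axes. The name `anova_components` and the test function are illustrative, not from the text. Because the grid is a product of independent uniform distributions, the resulting components are mean-zero and mutually orthogonal up to floating-point rounding.

```python
import itertools
import numpy as np

def anova_components(values):
    """Split a tabulated function into its components f_S.

    Assumes values[i_1, ..., i_n] = f(grid_1[i_1], ..., grid_n[i_n]) with
    independent, equally likely grid points, so E[Y | X_S] is a plain mean
    over the axes not in S. Returns {S: f_S}, with S a sorted tuple of axes
    and f_S kept with size-1 axes so it broadcasts against `values`.
    """
    n = values.ndim
    comps = {}
    for size in range(n + 1):  # lower orders first, so every f_T with T < S exists
        for S in itertools.combinations(range(n), size):
            others = tuple(k for k in range(n) if k not in S)
            # E[Y | X_S]: average out every input outside S
            cond_mean = values.mean(axis=others, keepdims=True) if others else values
            # subtract all lower-order pieces f_T, T a proper subset of S
            lower = sum(comps[T] for T in comps if set(T) < set(S))
            comps[S] = cond_mean - lower
    return comps

m = 50
grid = (np.arange(m) + 0.5) / m  # midpoints of [0, 1], equal weights
x1, x2, x3 = np.meshgrid(grid, grid, grid, indexing="ij")
values = x1 + x2 + x1 * x2 + np.sin(2 * np.pi * x3)  # illustrative f
comps = anova_components(values)

# The components add back up to f exactly (expected: True).
total = sum(np.broadcast_to(c, values.shape) for c in comps.values())
print(np.allclose(total, values))
# Largest |mean| among the non-constant components: rounding-level.
print(max(abs(float(c.mean())) for S, c in comps.items() if S))
```

The table has $m^n$ entries and there are $2^n$ components, so this brute-force approach only makes sense for small $n$; for a model with many inputs, such as the MNIST classifier above, one would instead estimate the relevant variances by sampling.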

3. Conditional Expectation as Projection

Think of all functions of $X_i$ as a subspace of $L_2$. The conditional expectation $\mathbb{E}[Y\mid X_i]$ is the orthogonal projection of $Y$ onto that subspace. Therefore

$$f_i = \mathbb{E}[Y\mid X_i] - \mathbb{E}[Y]$$

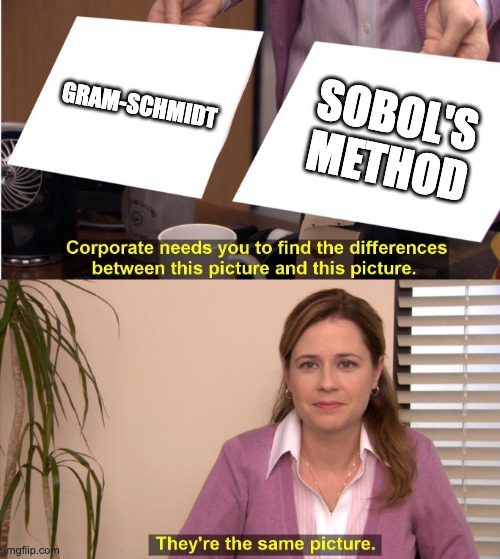

is orthogonal to the constant subspace (spanned by $f_0$). This is directly analogous to the Gram–Schmidt process: each time we subtract projections onto already–constructed orthogonal components, producing a new piece that is orthogonal to all previous ones. The sum of all components still equals $f(X)$ exactly—no information is lost. In fact, it's not just analogous. It *is* Gram-Schmidt in the Hilbert space $L_2$ with inner product $L_2$ with inner product $\langle U,V\rangle = \mathbb{E}[UV]$, where conditional expectations play the role of orthogonal projections. In the end, the variance of $Y=f(X)$ splits into uncorrelated pieces attributable to individual variables and their
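The projection picture can be checked numerically. This minimal sketch assumes (our choice, not the text's) two independent inputs uniform on a midpoint grid of $[0,1]$, so $\langle U,V\rangle=\mathbb{E}[UV]$ is a grid average; the function $f$ and the helper `inner` are illustrative. Averaging out $X_2$ gives $\mathbb{E}[Y\mid X_1]$; the residual is orthogonal to an arbitrary function of $X_1$, and moving away from the projection can only increase the squared error.

```python
import numpy as np

# Assumption: X_1, X_2 independent and uniform on an m-point midpoint grid of [0, 1].
m = 40
grid = (np.arange(m) + 0.5) / m
x1, x2 = np.meshgrid(grid, grid, indexing="ij")  # axis 0 <-> X_1, axis 1 <-> X_2
y = x1 + x2 + x1 * x2                            # illustrative f on the grid

def inner(u, v):
    """<U, V> = E[UV], broadcasting functions of fewer variables over the grid."""
    u, v = np.broadcast_arrays(u, v)
    return float(np.mean(u * v))

proj = y.mean(axis=1, keepdims=True)  # E[Y | X_1]: average out X_2, shape (m, 1)
f0 = y.mean()                         # E[Y], the projection onto the constants
f1 = proj - f0                        # main effect of X_1
residual = y - proj

rng = np.random.default_rng(0)
g = rng.normal(size=(m, 1))           # an arbitrary function of X_1

print(inner(residual, g))             # ~0: residual is orthogonal to functions of X_1
print(inner(f1, np.ones_like(f1)))    # ~0: f_1 is orthogonal to the constants
# Pythagoras: E[(Y - proj - g)^2] = E[(Y - proj)^2] + E[g^2] (expected: True)
print(inner(residual, residual) <= inner(residual - g, residual - g))
```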

4. Decomposing the Variance

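Because every component $f_S$ with $S\neq\emptyset$ has mean zero, orthogonality here is the same as uncorrelatedness. Subtracting $f_0$, squaring, and taking expectations (i.e., taking squared $L_2$ norms), all cross terms vanish and we obtain a variance decomposition. Write the index set of inputs as $[n]=\{1,\dots,n\}$ and let $S\subseteq [n]$ denote a subset. Then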

$$\operatorname{Var}(Y) = \sum_{\emptyset \neq S \subseteq [n]} V_S,$$

where, for singletons,

$$V_i = \operatorname{Var}_{X_i}\big( \mathbb{E}[Y \mid X_i] \big),$$

and for pairs,

$$V_{ij} = \operatorname{Var}_{X_i,X_j}\big( \mathbb{E}[Y \mid X_i, X_j] \big) - V_i - V_j,$$

and, in general,

$$V_S = \operatorname{Var}_{X_S}\big( \mathbb{E}[Y \mid X_S] \big) - \sum_{T \subsetneq S} V_T.$$
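The recursion for $V_S$ can be checked numerically. A minimal sketch, assuming (our choice, not the text's) that each input is uniform on a finite midpoint grid of $[0,1]$, so every conditional expectation is a plain average over the grid; `variance_components` is a hypothetical helper name. Dividing each $V_i$ by $\operatorname{Var}(Y)$ gives Sobol's first-order index, the fraction of the variance attributable to $X_i$ alone, which is the quantity the introduction asks about.

```python
import itertools
import numpy as np

def variance_components(values):
    """Return {S: V_S} for every nonempty subset S of the axes of `values`.

    Assumes values[i_1, ..., i_n] tabulates f on a product grid whose points
    are independent and equally likely, so every expectation is a plain mean.
    Uses V_S = Var(E[Y | X_S]) - sum of V_T over proper subsets T of S.
    """
    n = values.ndim
    V = {}
    for size in range(1, n + 1):  # smaller subsets first
        for S in itertools.combinations(range(n), size):
            others = tuple(k for k in range(n) if k not in S)
            cond_mean = values.mean(axis=others) if others else values  # E[Y | X_S]
            total = float(np.var(cond_mean))  # Var_{X_S}(E[Y | X_S]), equal weights
            V[S] = total - sum(V[T] for T in V if set(T) < set(S))
    return V

m = 200
grid = (np.arange(m) + 0.5) / m  # midpoint grid approximating Uniform(0, 1)
x1, x2 = np.meshgrid(grid, grid, indexing="ij")
values = x1 + x2 + x1 * x2       # illustrative f
V = variance_components(values)
var_y = float(np.var(values))

# First-order indices: share of Var(Y) explained by each input on its own.
first_order = {S[0]: V[S] / var_y for S in V if len(S) == 1}
print(V)
print(first_order)
print(sum(V.values()), var_y)    # the pieces add up to Var(Y)
```

On this grid the result is close to the continuous values $V_1=V_2=3/16$ and $V_{12}=1/144$; the small discrepancy comes from the grid itself, whose variance is $(m^2-1)/(12m^2)$ rather than $1/12$.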

Equivalently, because the $f_S$’s are orthogonal,

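$$
\operatorname{Var}(Y) = \sum_{\emptyset\neq S\subseteq [n]} \|f_S\|_{L_2}^2 = \sum_{s=1}^n \sum_{i_1<\cdots<i_s} V_{i_1\cdots i_s} = \sum_{i} V_i + \sum_{i<j} V_{ij} + \cdots + V_{12\cdots n}.
$$

Here $\|f_S\|_{L_2}^2 = \mathbb{E}[f_S^2] = \operatorname{Var}(f_S) = V_S$, because each $f_S$ with $S\neq\emptyset$ has mean zero.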
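A worked example helps check the bookkeeping. Take the illustrative function $f(x_1,x_2)=x_1+x_2+x_1x_2$ (our own choice) with $X_1,X_2$ independent and uniform on $[0,1]$, so $\operatorname{Var}(X_k)=\tfrac1{12}$. Directly, $\mathbb{E}[Y]=\tfrac54$ and $\mathbb{E}[Y^2]=\tfrac{35}{18}$, so $\operatorname{Var}(Y)=\tfrac{35}{18}-\tfrac{25}{16}=\tfrac{55}{144}$. With $\mathbb{E}[Y\mid X_1]=\tfrac32X_1+\tfrac12$, the formulas for $V_i$ and $V_{ij}$ give

$$
\begin{aligned}
V_1 &= \operatorname{Var}\big(\tfrac32 X_1 + \tfrac12\big) = \tfrac94\cdot\tfrac1{12} = \tfrac{3}{16}, \qquad V_2 = \tfrac{3}{16} \ \text{(by symmetry)},\\
V_{12} &= \operatorname{Var}(Y) - V_1 - V_2 = \tfrac{55}{144} - \tfrac{54}{144} = \tfrac{1}{144} = \operatorname{Var}\big((X_1-\tfrac12)(X_2-\tfrac12)\big).
\end{aligned}
$$

So $X_1$ alone accounts for $V_1/\operatorname{Var}(Y) = \tfrac{27}{55} \approx 49\%$ of the variance, $X_2$ for the same share, and the interaction for $\tfrac{1}{55} \approx 1.8\%$.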


References & Further Reading

- Sobol, I. M. (1993). Sensitivity estimates for nonlinear mathematical models.

- Saltelli, A., et al. (2008). Global Sensitivity Analysis: The Primer.

- Any standard text on $L_2$ spaces, conditional expectation, and Gram–Schmidt.